The Evolution of Voice Bots: Advances in Speech-to-Text and Text-to-Speech Technologies in 2025, with Language Coverage

Executive Summary

The voice bot landscape has transformed dramatically between 2023 and 2025, with significant advancements in speech-to-text (STT) and text-to-speech (TTS) technologies. This report examines these developments, focusing on background noise reduction, latency improvements, neural and generative voices, and the emergence of specialized providers like Eleven Labs and Deepgram. We'll also explore how Enterprise Bot's platform orchestrates these technologies to deliver comprehensive voice bot solutions for businesses seeking to enhance customer experiences through conversational AI.

Introduction to Modern Voice Bot Technology

Voice bots have evolved from simple command-response systems to sophisticated conversational AI assistants. The technological backbone of these systems, speech-to-text and text-to-speech, has changed substantially in recent years, enabling more natural, responsive, and effective voice interactions.

Enterprise buyers now face a complex marketplace with specialized providers offering improvements in specific areas. This fragmentation creates both opportunities and challenges. While organizations can access best-in-class technologies for particular languages or use cases, integrating these disparate solutions requires expertise and technical resources.

Recent Advances in Speech-to-Text Technology

Background Noise Reduction

One of the most significant barriers to effective voice bot deployment has traditionally been environmental noise interference. Recent advances in this area include:

- Multi-channel noise suppression algorithms that can differentiate between speech and various types of background noise in real time

- Adaptive noise filtering that adjusts based on detected ambient conditions

- Deep learning models specifically trained to preserve speech clarity while eliminating diverse noise types

- Context-aware processing that can maintain intelligibility even in challenging acoustic environments

Google Speech-to-Text has made substantial strides in this area, implementing neural network architectures that can process audio signals in ways that mimic human auditory perception. Their enhanced models can now effectively isolate speech even in environments with music, cross-talk, or machinery sounds.

Deepgram's specialized audio intelligence platform has similarly focused on noise resilience, developing models that perform exceptionally well in industrial settings where traditional speech recognition would fail.
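The adaptive-filtering idea from the list above can be illustrated with a deliberately simple sketch. It is a classic energy-based noise gate, not the deep-learning approach the vendors named here use, and every name and threshold in it (`adaptive_gate`, `margin`, `alpha`) is an assumption chosen for illustration. The point is only to show how a noise estimate can track ambient conditions and how each frame is compared against it.

```python
from typing import List


def rms(frame: List[float]) -> float:
    """Root-mean-square level of one audio frame."""
    return (sum(x * x for x in frame) / len(frame)) ** 0.5


def adaptive_gate(frames: List[List[float]], margin: float = 3.0,
                  alpha: float = 0.9) -> List[bool]:
    """Return True for each frame judged to contain speech.

    Assumption: the first frame contains only background noise and is
    used to seed the noise-floor estimate.
    """
    noise_floor = rms(frames[0])
    decisions = []
    for frame in frames:
        level = rms(frame)
        is_speech = level > margin * noise_floor
        if not is_speech:
            # Adapt the floor only on noise frames so that speech
            # does not inflate the estimate.
            noise_floor = alpha * noise_floor + (1 - alpha) * level
        decisions.append(is_speech)
    return decisions


# Synthetic frames: quiet, quiet, loud (speech-like), quiet.
frames = [[0.01, -0.01] * 2, [0.012, -0.012] * 2,
          [0.5, -0.5] * 2, [0.011, -0.011] * 2]
decisions = adaptive_gate(frames)
```

A production system would work on spectral features rather than raw frame energy, but the same loop structure (estimate, compare, adapt) applies.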

Latency Improvements

Low latency has become a critical differentiator for speech-to-text software, particularly for real-time applications. Notable developments include:

- Streaming recognition capabilities that begin processing audio before utterances are complete

- Edge deployment options that reduce round-trip time to cloud servers

- Optimized model architectures specifically designed for speed without sacrificing accuracy

- Predictive processing that anticipates likely speech patterns

These improvements have reduced average response times from 500-700ms in 2023 to under 200ms in many commercial speech-to-text apps by 2025, approaching the threshold where users perceive interactions as truly instantaneous.
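Streaming recognition is the item on this list that most directly affects perceived latency, so a small sketch may help. The generator below is a hypothetical stand-in for a recognizer: each "chunk" is a list of already-decoded words, which is an assumption made to keep the example self-contained. It shows only the control flow, in which interim hypotheses are emitted before the utterance is complete and a final hypothesis follows once the stream ends.

```python
from typing import Iterable, Iterator, List, Tuple


def stream_transcripts(chunks: Iterable[List[str]]) -> Iterator[Tuple[str, bool]]:
    """Yield (text, is_final) pairs as chunks arrive.

    Each chunk stands in for the words a real acoustic model would
    decode from a short slice of audio (hypothetical simplification).
    """
    words: List[str] = []
    for chunk in chunks:
        words.extend(chunk)
        # Interim hypothesis: available before the speaker has finished.
        yield " ".join(words), False
    # Final hypothesis, emitted once the audio stream ends.
    yield " ".join(words), True


chunks = [["I", "would"], ["like", "to"], ["check", "my", "balance"]]
results = list(stream_transcripts(chunks))
```

Downstream components can start intent detection on the interim text instead of waiting for the final result, which is where much of the latency saving comes from.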

Language Support and Accuracy

The breadth and depth of language support have expanded dramatically:

- Increased language coverage with specialized models for previously underserved languages and dialects

- Domain-specific training for industry terminology and jargon

- Accent and dialect recognition improvements that better serve diverse global user bases

- Code-switching handling that can process conversations containing multiple languages

Speech-to-text AI now regularly achieves accuracy rates above 95% for most major languages in optimal conditions, with continuing improvements for challenging scenarios like heavily accented speech or uncommon dialects.
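The report does not say how these accuracy figures are measured. A common convention in speech recognition is word error rate (WER), with accuracy read loosely as 1 minus WER; that reading is an assumption here. The sketch below computes WER as the word-level edit distance between a reference transcript and a recognizer's output, divided by the number of reference words.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j] = edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


wer = word_error_rate(
    "please transfer fifty dollars to my savings account",
    "please transfer fifteen dollars to my savings account",
)
# One substituted word out of eight gives a WER of 0.125.
```

Under this reading, "above 95% accuracy" corresponds to fewer than one word error in twenty reference words.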

Text-to-Speech Revolution

Neural and Generative Voices

Perhaps the most transformative development in voice bot technology has been the emergence of neural and generative TTS systems:

- Neural voice synthesis using deep learning to create more natural-sounding speech

- Emotional tone variation that can express different moods appropriate to content

- Prosody control allowing for emphasis, pauses, and intonation that mimics natural speech patterns

- Voice cloning capabilities that can generate new speech in the style of a specific voice with minimal sample data

Eleven Labs has been particularly innovative in this space, offering voice cloning technology that generates natural-sounding speech with an emotional range that was previously difficult to achieve. Their 2024 release demonstrated fine-grained control over vocal characteristics, allowing subtle adjustments that convey empathy, enthusiasm, or concern as appropriate to the conversation context.
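Prosody control of the kind listed above is often expressed through SSML, the W3C Speech Synthesis Markup Language. The sketch below builds a small SSML fragment with the standard `prosody`, `break`, and `emphasis` elements. The function name and the specific rate, pitch, and pause values are illustrative assumptions, and which elements a given TTS provider honors varies, so this should be read as a sketch rather than as any vendor's documented input.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape


def empathetic_ssml(apology: str, next_step: str) -> str:
    """Wrap two sentences in SSML: a slower, lower apology, a short
    pause, then an emphasized next step."""
    return (
        "<speak>"
        f'<prosody rate="slow" pitch="-2st">{escape(apology)}</prosody>'
        '<break time="300ms"/>'
        f'<emphasis level="moderate">{escape(next_step)}</emphasis>'
        "</speak>"
    )


ssml = empathetic_ssml(
    "I'm sorry about the delay.",
    "Let's fix this & get you moving.",
)
# Parsing confirms the markup is well-formed XML; ET.fromstring
# raises ParseError otherwise.
root = ET.fromstring(ssml)
```

Escaping the text before inserting it matters because customer-facing strings frequently contain characters such as `&` that would otherwise break the markup.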

Multilingual Capabilities

Text-to-speech software has become increasingly sophisticated in handling multiple languages:

- Cross-lingual voice transfer allowing a single voice identity to speak multiple languages naturally

- Pronunciation refinement for non-native words within speech

- Culture-specific speech patterns that reflect regional communication styles

- Natural code-switching between languages within the same speech stream

These capabilities are particularly valuable for global enterprises requiring consistent brand voice across markets while respecting linguistic and cultural nuances.

The Specialized Provider Landscape

Eleven Labs

Eleven Labs has emerged as a specialist in ultra-realistic voice generation, focusing on:

- Emotion-rich voice synthesis with unprecedented naturalness

- Real-time voice customization features

- Voice preservation technology that maintains consistency across various content types

- Voice design tools for creating unique synthetic voices

Their technology has found particular application in content creation, audiobook production, and premium customer service experiences where voice quality significantly impacts user perception.

Deepgram

Deepgram has established itself as a leader in audio intelligence through:

- Purpose-built speech models for specific industries and use cases

- Noise-robust recognition even in challenging environments

- Speaker diarization capabilities that can distinguish between multiple speakers

- Intent recognition integrated directly into the speech processing pipeline

Their API-first approach and specialized models for industries like healthcare, financial services, and telecommunications have made them a preferred choice for enterprises with specific compliance and accuracy requirements.

Other Notable Players

The speech technology ecosystem has expanded to include numerous specialized providers:

- AssemblyAI offering advanced features like summarization and content moderation

- Speechmatics focusing on accuracy across diverse accents and demographics

- Whisper by OpenAI providing open-source speech recognition with multilingual capabilities

- Vocalis specializing in voice biomarkers for healthcare applications

This diversification reflects the maturing market and increasing specialization of voice technologies for specific use cases and requirements.

Integration Challenges for Enterprise

The proliferation of specialized providers creates several challenges for enterprises:

- Fragmented vendor relationships requiring multiple contracts and integrations

- Inconsistent user experiences when different technologies do not work together seamlessly

- Complex technical integration requiring specialized expertise

- Difficulty optimizing for specific use cases that might benefit from different providers

- Ongoing management overhead of multiple systems

These challenges have created demand for orchestration platforms that can unify disparate voice technologies into coherent solutions.

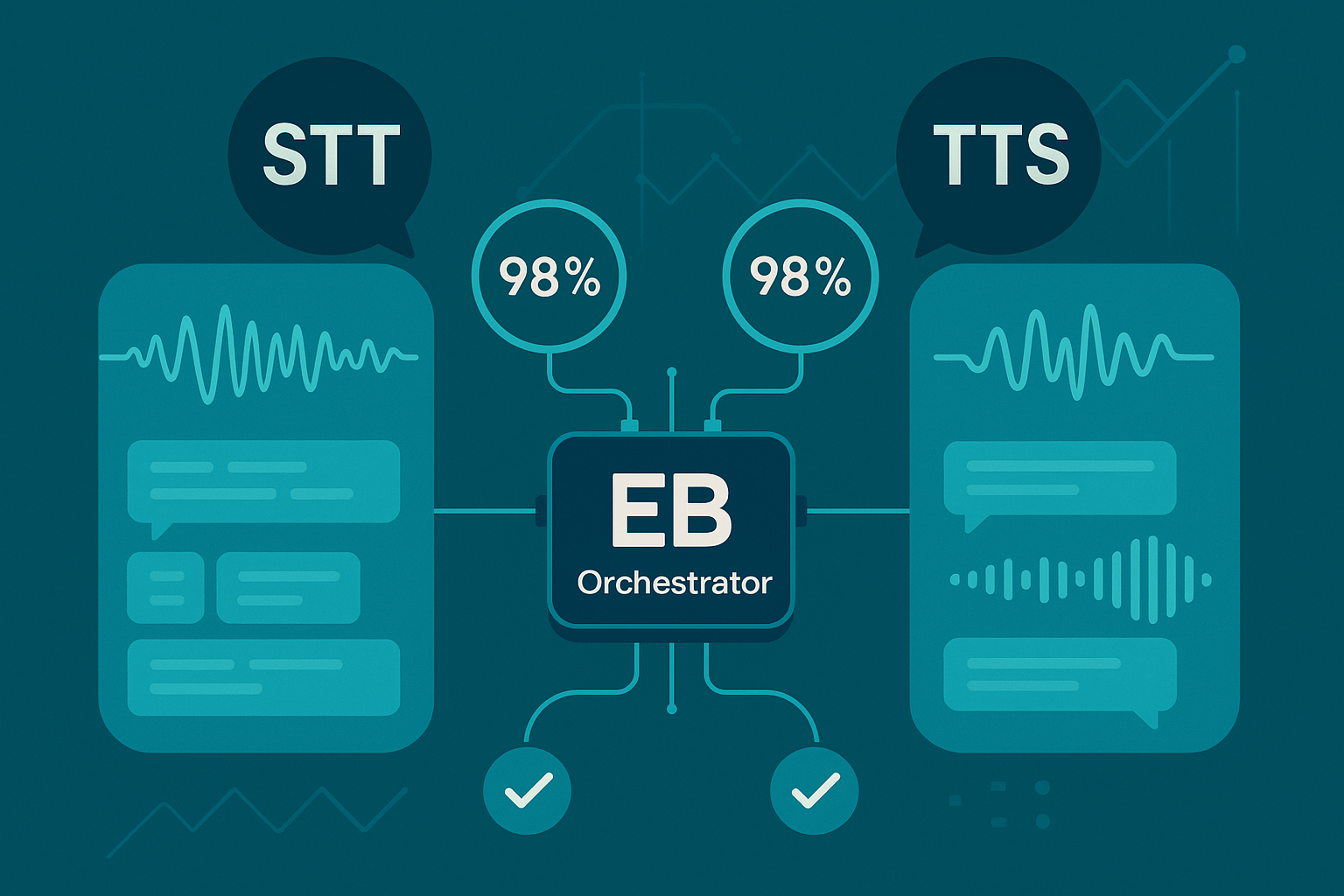

Enterprise Bot as Technology Orchestrator

Enterprise Bot has positioned itself as a central orchestrator in this complex ecosystem, offering a platform approach that integrates best-of-breed voice technologies.

Unified Platform Approach

The Enterprise Bot platform provides:

- Single integration point for multiple STT and TTS technologies

- Consistent API regardless of underlying providers

- Centralized management console for all voice interactions

- Standardized analytics across channels and technologies

- Unified security and compliance framework

This approach significantly reduces technical complexity while allowing enterprises to leverage specialized capabilities from multiple providers.
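The "consistent API regardless of underlying providers" idea is commonly implemented with an adapter layer. The sketch below shows that general pattern under stated assumptions: `SpeechToText`, `VoiceGateway`, and the two stub providers are hypothetical names invented for illustration and do not describe Enterprise Bot's actual interfaces or any vendor SDK.

```python
from typing import Dict, Optional, Protocol


class SpeechToText(Protocol):
    """Interface every provider adapter is assumed to implement."""

    def transcribe(self, audio: bytes, language: str) -> str: ...


class StubProviderA:
    """Hypothetical adapter; a real one would call a vendor SDK here."""

    def transcribe(self, audio: bytes, language: str) -> str:
        return f"A[{language}]: {len(audio)} bytes"


class StubProviderB:
    """Second hypothetical adapter with the same call signature."""

    def transcribe(self, audio: bytes, language: str) -> str:
        return f"B[{language}]: {len(audio)} bytes"


class VoiceGateway:
    """Single entry point that hides which provider handles a request."""

    def __init__(self, providers: Dict[str, SpeechToText], default: str) -> None:
        if default not in providers:
            raise KeyError(f"unknown default provider: {default}")
        self._providers = providers
        self._default = default

    def transcribe(self, audio: bytes, language: str,
                   provider: Optional[str] = None) -> str:
        adapter = self._providers[provider or self._default]
        return adapter.transcribe(audio, language)


gateway = VoiceGateway({"a": StubProviderA(), "b": StubProviderB()}, default="a")
default_result = gateway.transcribe(b"\x00\x01", "en-US")
override_result = gateway.transcribe(b"\x00\x01", "de-DE", provider="b")
```

Callers depend only on `VoiceGateway.transcribe`, so swapping or adding a provider means writing one new adapter rather than changing application code.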

Dynamic Provider Selection

One of Enterprise Bot's key innovations is its ability to dynamically select optimal speech processing technologies based on situational factors:

- Language-specific routing to providers with the best support for particular languages

- Use case optimization matching technology to task requirements

- Quality-based selection that can prioritize accuracy or speed as needed

- Cost optimization balancing performance needs with budget considerations

- Fallback handling for service continuity during outages

This capability allows enterprises to provide consistent experiences while leveraging the best available technology for each interaction.
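One way to picture the selection criteria listed above is a ranking step followed by a fallback loop. The sketch below is an assumption about how such routing could work in general, not a description of Enterprise Bot's implementation. The provider names and all accuracy, latency, and cost figures are made up for the example.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List, Tuple


@dataclass(frozen=True)
class Provider:
    name: str
    languages: FrozenSet[str]
    accuracy: float      # illustrative score between 0 and 1
    latency_ms: int      # illustrative typical latency
    cost_per_min: float  # illustrative price


SORT_KEYS = {
    "accuracy": lambda p: -p.accuracy,   # higher accuracy first
    "speed": lambda p: p.latency_ms,     # lower latency first
    "cost": lambda p: p.cost_per_min,    # lower cost first
}


def rank_providers(providers: List[Provider], language: str,
                   prioritize: str = "accuracy") -> List[Provider]:
    """Keep providers that support the language, best candidate first."""
    candidates = [p for p in providers if language in p.languages]
    return sorted(candidates, key=SORT_KEYS[prioritize])


def transcribe_with_fallback(ranked: List[Provider],
                             call: Callable[[Provider], str]) -> Tuple[str, str]:
    """Try providers in order; move on when one is unreachable."""
    for provider in ranked:
        try:
            return provider.name, call(provider)
        except ConnectionError:
            continue
    raise RuntimeError("no provider could handle the request")


PROVIDERS = [
    Provider("alpha", frozenset({"en-US", "de-DE"}), 0.96, 250, 0.020),
    Provider("beta", frozenset({"en-US", "hi-IN"}), 0.93, 120, 0.012),
]


def flaky_call(provider: Provider) -> str:
    """Simulated request in which the 'alpha' provider is down."""
    if provider.name == "alpha":
        raise ConnectionError("alpha unavailable")
    return f"transcript from {provider.name}"


by_accuracy = rank_providers(PROVIDERS, "en-US", "accuracy")
by_speed = rank_providers(PROVIDERS, "en-US", "speed")
winner = transcribe_with_fallback(by_accuracy, flaky_call)
```

Here the accuracy ranking prefers `alpha`, but because the simulated call to it fails, the request is served by `beta`, which is the fallback behavior described in the last bullet.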

Conversational Intelligence Layer

Beyond simple technology integration, Enterprise Bot adds value through:

- Context preservation across multiple turns of conversation

- Intent recognition that understands user needs beyond literal words

- Sentiment analysis to detect and respond to emotional cues

- Conversation flow management that guides interactions toward resolution

- Learning and optimization based on interaction patterns

This intelligence layer transforms raw speech technology into true conversational AI voice bot solutions that can effectively engage customers and address their needs.

Deployment Flexibility

Enterprise Bot's platform accommodates diverse enterprise requirements through:

- Cloud, hybrid, and on-premises deployment options

- Integration with existing contact center infrastructure

- Support for multiple channels (phone, web, mobile, smart speakers)

- Custom branding and voice design

- Enterprise-grade security and compliance features

This flexibility ensures that voice bot implementations align with organizational infrastructure and requirements while minimizing disruption.

Future Directions and Emerging Trends

Multimodal Integration

The next frontier for voice bot technology involves integration with other modalities:

- Visual context awareness combining voice with camera input

- Screen sharing capabilities during voice interactions

- Gesture recognition complementing speech commands

Enterprise Bot is already working toward these capabilities through partnerships with computer vision providers.

Advanced Personalization

Personalization is evolving beyond basic customer recognition:

- Emotional intelligence that adapts tone and approach based on detected user state

- Conversation style matching that mirrors user communication preferences

- Learning from previous interactions to anticipate needs and preferences

- Proactive engagement based on predicted user requirements

These capabilities promise to make voice bot interactions increasingly indistinguishable from human conversations.

Conclusion: The Orchestrated Future of Voice Bots

The voice bot landscape has undergone remarkable transformation from 2023 to 2025, with specialized providers pushing the boundaries of what's possible in speech-to-text and text-to-speech technology. Background noise reduction, latency improvements, and neural voice generation have made voice interactions more natural and effective than ever before.

However, this specialization has created complexity for enterprises seeking to implement voice bot solutions. The true innovation now lies not just in individual technologies but in platforms that can orchestrate these capabilities into coherent, effective solutions.

Enterprise Bot stands at the forefront of this orchestration approach, enabling organizations to leverage best-in-class speech technologies while maintaining a unified, manageable platform. By dynamically selecting optimal providers for each interaction while adding an intelligent conversational layer, Enterprise Bot delivers voice bot solutions that combine technological excellence with practical business value.

For enterprise buyers navigating this evolving landscape, the platform approach offers a path to implementation that balances innovation with integration simplicity, allowing organizations to deploy sophisticated conversational AI voice bots without becoming experts in every underlying technology.

As the market continues to evolve, this orchestration capability will become increasingly valuable, enabling enterprises to continuously incorporate new advances while maintaining consistent, high-quality customer experiences across all interactions.

Language Coverage (Enterprise Bot 2025)

| Language / Locale | BCP-47 Code | STT | TTS | Multilingual Crosstalk Support |
| --- | --- | --- | --- | --- |
| Afrikaans (South Africa) | af-ZA | Real time | Regular TTS | |
| Arabic | ar-XA | Real time | Generative Voices | |
| Arabic (Gulf) | ar-AE | Real time | Generative Voices | |
| Basque (Spain) | eu-ES | Real time | Regular TTS | |
| Bengali (India) | bn-IN | Real time | Regular TTS | |
| Bulgarian (Bulgaria) | bg-BG | Real time | Generative Voices | |
| Catalan (Spain) | ca-ES | Real time | Regular TTS | |
| Chinese (Cantonese) | yue-CN | Real time | Generative Voices | |
| Chinese (Mandarin, Simplified) | cmn-CN | Real time | Generative Voices | |
| Czech (Czech Republic) | cs-CZ | Real time | Generative Voices | |
| Danish (Denmark) | da-DK | Real time | Generative Voices | |
| Dutch (Netherlands) | nl-NL | Real time | Generative Voices | Yes |
| English (Australian) | en-AU | Real time | Generative Voices | |
| English (British) | en-GB | Real time | Generative Voices | Yes |
| English (Indian) | en-IN | Real time | Generative Voices | Yes |
| English (US) | en-US | Real time | Generative Voices | Yes |
| Finnish (Finland) | fi-FI | Real time | Generative Voices | |
| French (Canadian) | fr-CA | Real time | Generative Voices | |
| French (France) | fr-FR | Real time | Generative Voices | Yes |
| German (Germany) | de-DE | Real time | Generative Voices | Yes |
| German (Swiss) | de-CH | Real time | Regular TTS | |
| Greek (Greece) | el-GR | Real time | Generative Voices | |
| Hindi (India) | hi-IN | Real time | Generative Voices | Yes |
| Hungarian | hu-HU | Real time | Generative Voices | |
| Indonesian | id-ID | Real time | Generative Voices | |
| Italian (Italy) | it-IT | Real time | Generative Voices | Yes |
| Japanese (Japan) | ja-JP | Real time | Generative Voices | Yes |
| Korean (South Korea) | ko-KR | Real time | Generative Voices | |
| Latvian (Latvia) | lv-LV | Real time | Regular TTS | |
| Lithuanian (Lithuania) | lt-LT | Real time | Regular TTS | |
| Malay | ms-MY | Real time | Generative Voices | |
| Norwegian (Bokmål, Norway) | nb-NO | Real time | Generative Voices | |
| Polish (Poland) | pl-PL | Real time | Generative Voices | |
| Portuguese (Brazilian) | pt-BR | Real time | Generative Voices | |
| Portuguese (Portugal) | pt-PT | Real time | Generative Voices | |
| Romanian | ro-RO | Real time | Generative Voices | |
| Russian (Russia) | ru-RU | Real time | Generative Voices | Yes |
| Slovak | sk-SK | Real time | Generative Voices | |
| Spanish (Mexican) | es-MX | Real time | Generative Voices | |
| Spanish (Spain) | es-ES | Real time | Generative Voices | Yes |
| Spanish (US) | es-US | Real time | Generative Voices | |
| Swedish (Sweden) | sv-SE | Real time | Generative Voices | |
| Thai | th-TH | Real time | Generative Voices | |
| Turkish (Turkey) | tr-TR | Real time | Generative Voices | |
| Ukrainian | uk-UA | Real time | Generative Voices | |
| Vietnamese | vi-VN | Real time | Generative Voices | |

Note: BCP-47 codes are standard identifiers for languages and locales. For example, de-CH denotes German as used in Switzerland.

*Multilingual Crosstalk Support lets you transcribe speakers who use different languages within the same conversation.

*Languages not marked for Multilingual Crosstalk Support can still be identified through language detection, but switching languages mid-sentence is not supported for them.
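For readers who want to consume the table programmatically, the sketch below shows one way to split a simple language-region code and look up crosstalk support. The set of locales is copied from the rows marked "Yes" in the table above. The function names are hypothetical, and the parser handles only the two-part `language-REGION` form used in this table, not the full BCP-47 grammar (which also allows script and variant subtags).

```python
from typing import Tuple

# Locales marked "Yes" for Multilingual Crosstalk Support in the table.
CROSSTALK_LOCALES = frozenset({
    "nl-NL", "en-GB", "en-IN", "en-US", "fr-FR", "de-DE",
    "hi-IN", "it-IT", "ja-JP", "ru-RU", "es-ES",
})


def parse_tag(tag: str) -> Tuple[str, str]:
    """Split 'language-REGION' and normalize the casing of each part."""
    language, _, region = tag.partition("-")
    return language.lower(), region.upper()


def supports_crosstalk(tag: str) -> bool:
    """True if the locale is marked for mid-conversation language switching."""
    language, region = parse_tag(tag)
    return f"{language}-{region}" in CROSSTALK_LOCALES


checks = {tag: supports_crosstalk(tag) for tag in ("de-DE", "de-CH", "EN-us")}
```

Normalizing case before the lookup avoids mismatches when codes arrive from systems that do not follow the conventional lowercase-language, uppercase-region style.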